We are honored to count you among the 1+ MILLION readers of our weekly newsletter. Please help grow our community by inviting your friends to subscribe.

If you’re new here, we celebrate the ways artificial intelligence is making our world more human. Make sure you check out my new book and community. This newsletter is released in collaboration with The Rundown AI.

This week’s top 5 stories you can't miss:

1️⃣ Meta's smart glasses get a neural upgrade

Meta just revealed three new pairs of smart glasses at Meta Connect: the Ray-Ban Display glasses, which pair with a Neural Band that detects muscle signals before you visibly move; an athlete-focused Oakley line; and the Ray-Ban Meta Gen 2.

Key Takeaways:

The Ray-Ban Display glasses use the Neural Band to detect electrical muscle signals, enabling messaging, navigation, and more via subtle finger movements.

The Neural Band will also learn and adapt to each user’s unique patterns, making control of the interface nearly imperceptible.

The Ray-Ban Meta Gen 2 doubles battery life to 8 hours and adds 3K Ultra HD video recording plus a conversation focus feature that amplifies voices in noisy environments.

The Oakley Meta Vanguard targets athletes with 9-hour battery life, water resistance, and Garmin connectivity for real-time performance metrics.

My Take:

Meta's Neural Band represents a critical inflection point in the $44 billion agentic AI market's evolution toward seamless human-computer interfaces. While enterprises race to deploy AI agents behind screens, Meta is pioneering the next frontier: making AI interaction as natural as thought itself. This aligns with a broader trend I'm seeing, where the most successful AI implementations disappear into the background of human activity. Meta's approach also signals a shift from the current multi-agent platform wars toward embedded AI experiences.

2️⃣ YouTube ships new AI tools for creators

YouTube just dropped over 30 new creator tools at its Made on YouTube event, including AI-powered editing and clipping features, Veo 3 Fast in Shorts, auto-dubbing, and more.

Key Takeaways:

Google’s Veo 3 Fast model launches free for Shorts creators, with the ability to generate 480p videos with matching audio from text prompts.

Auto-dubbing expands to include lip-sync technology that animates speakers' mouths to match translated audio across 20 languages.

YouTube's AI now automatically clips engaging moments from long-form videos into vertical Shorts, helping podcasters and creators repurpose content.

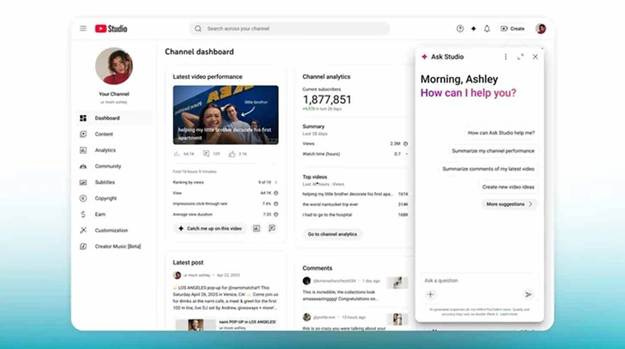

Ask Studio arrives as an analytics chatbot that answers performance questions and suggests data-driven optimization strategies for channels.

Edit with AI turns raw footage into polished first drafts, adding transitions, music, and voiceovers.

My Take:

This massive rollout reflects the shift from customizable platforms toward ready-to-use specialist agents. YouTube is effectively building a marketplace of content creation agents, mirroring the explosion of specialized agents across industries. Auto-dubbing with lip sync demonstrates the kind of multi-modal agent coordination I expect to become standard by 2028, when specialized agents seamlessly collaborate on different parts of a workflow.

3️⃣ OpenAI and Anthropic reveal how millions use AI

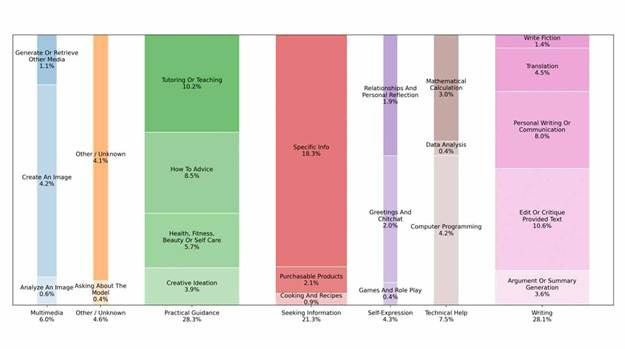

OpenAI and Anthropic both published new data on AI usage patterns across their platforms, revealing demographic shifts, geographic divides, and a growing split between personal and business applications.

Key Takeaways:

Claude users focus heavily on coding, while ChatGPT sees more writing and decision support, with users seeking advice more often than generated content.

Personal use of ChatGPT surged from 53% of messages in June 2024 to 73% by 2025, with non-work conversations growing faster than professional ones.

ChatGPT adoption in low- and middle-income countries is growing 4x faster, while Claude usage is largely concentrated in wealthy regions.

Both platforms show users delegating tasks more frequently over time, with an increase in “information seeking” and search rather than output generation.

My Take:

The shift toward personal use mirrors early enterprise software adoption patterns: individual users drive bottom-up transformation before corporate deployment follows. The 4x faster adoption in developing markets validates my thesis that agentic AI will democratize capabilities once exclusive to large corporations. Most significantly, the trend toward "information seeking" indicates that users are developing collaborative reasoning relationships rather than simple prompt-response interactions.

🔷 I’m honored to join EOT – Electronics of Tomorrow Expo 2025 in Denmark this September as a keynote speaker.

This event brings together more than 18,500 leaders, innovators, and decision-makers to explore the future of electronics and technology.

Here’s where you can find me: “Making our world more human with technology,” part of the Future Perspectives track on the geopolitical shifts shaping our industry.

Meet & Greet + Book Signing: Right after the panel.

📍MCH Messecenter Herning, Denmark 📅 September 30 – October 2, 2025

If you’ll be there, I’d love to connect in person!

4️⃣ Reve revamps creative platform with advanced editing

Reve just unveiled a newly revamped image platform that combines AI image generation, natural language editing, and drag-and-drop controls into a single freely available interface.

Key Takeaways:

The platform uses a "layout representation" system that converts images into code-like structures, enabling precise edits while preserving original images.

The ‘new Reve’ features a drag-and-drop editor that allows for granular changes to elements within both uploaded and generated images.

Reve also adds a chat box to create, blend, and edit images via natural language commands, with the ability to search the web for inspiration.

The company released API access in beta, allowing developers to integrate Reve's image creation and editing into third-party applications and workflows.

My Take:

It’s been just weeks since Google’s Nano Banana changed the image editing game, and ByteDance’s Seedream 4.0 and Reve have already launched similar capabilities. Image models have reached remarkable quality levels, and advanced editing is the next frontier, opening up completely new use cases.

5️⃣ Harvard’s AI helps reverse disease in cells

Harvard Medical School researchers just developed PDGrapher, a free AI model that pinpoints gene and drug combinations capable of transforming diseased cells back to healthy states.

Key Takeaways:

PDGrapher examines how genes, proteins, and cellular signals work together, rather than testing one drug target at a time as traditional methods do.

The tool outperformed competing AI systems by 35% when tested across 19 cancer types and delivered answers 25 times faster.

Researchers validated the tool by having it predict known lung cancer treatments, which it correctly identified, along with promising new targets.

Harvard teams are using the tool to find treatments for brain diseases like Parkinson's and Alzheimer's through partnerships with Massachusetts General Hospital.

My Take:

Most drugs today work by hitting a single target in the body, but complex diseases often outsmart these one-trick approaches. PDGrapher's ability to find multiple pressure points simultaneously could crack diseases that have eluded treatment, while potentially saving the billions typically lost on dead-end drug trials.

Which of these news stories resonates most with you?

Let us join hands to make our world more human! — Pascal

#artificialintelligence #intelligentautomation #futureofwork #AI #automation #management #technology #innovation