Intelligent Automation Newsletter #202

We are honored to count you among the 1+ MILLION readers of our weekly newsletter. Please help grow our community by inviting your friends to subscribe.

If you’re new here, we celebrate the ways Artificial Intelligence is making our world more Human. Make sure you check out my new book and community. This newsletter is released in collaboration with The Rundown AI.

This week’s 5 top stories you can't miss:

1️⃣ xAI releases Grok 4, billed as better than PhD level in every subject

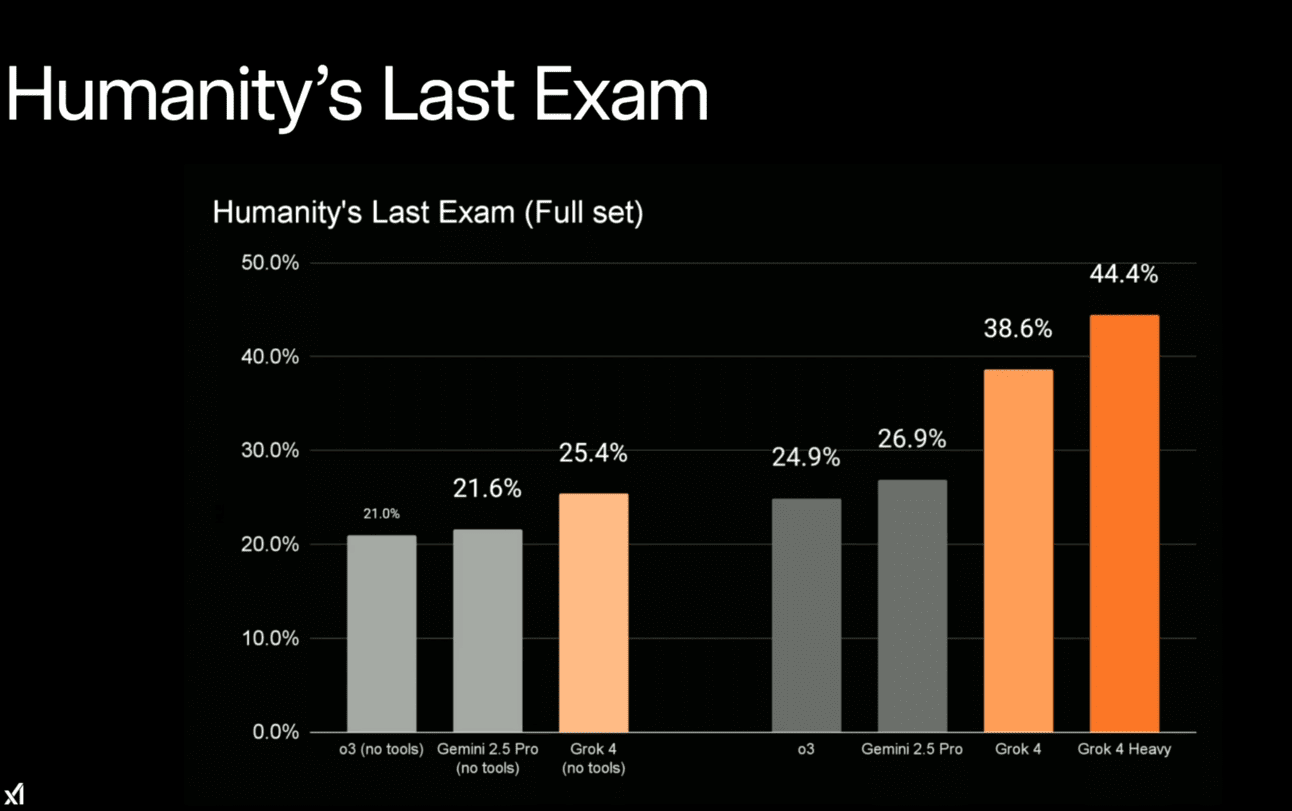

xAI just announced Grok 4 and Grok 4 Heavy, its next-gen reasoning-only models that the company says are “better than PhD levels in every subject” and that deliver frontier capabilities across benchmarks, including ARC-AGI and Humanity’s Last Exam.

Key Takeaways

Grok 4 is a single-agent AI with voice, vision, and a 128K context window, while Grok 4 Heavy is its advanced sibling, which uses multiple agents to tackle complex tasks.

Both mark a major jump in benchmarks, achieving frontier performance on Humanity's Last Exam, ARC-AGI-2, and AIME, and surpassing Gemini 2.5 Pro and OpenAI’s o3.

Grok 4 is available with the SuperGrok subscription at $30/month, while Grok 4 Heavy is part of the new SuperGrok Heavy plan priced at $300/month.

The new model is also available via API with a 256K-token context window and built-in search, priced at $3/million input tokens and $15/million output tokens.

The power-packed release comes after a major backlash against Grok 3, which was caught making racist and antisemitic comments after an update.
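
For readers budgeting API usage, the per-token prices quoted above translate into a simple calculation. The sketch below is illustrative only: the helper name `estimate_cost` and the example token counts are assumptions, and it does not model any discounts, pricing tiers, or tool fees that may apply in practice.

```python
# Prices quoted in this newsletter for the Grok 4 API (USD per million tokens).
INPUT_PRICE_PER_MILLION = 3.00
OUTPUT_PRICE_PER_MILLION = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost of one request at the quoted rates."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Example (made-up sizes): a 200,000-token prompt with a 2,000-token reply.
print(f"${estimate_cost(200_000, 2_000):.2f}")
```

At these rates, output tokens cost five times as much as input tokens, so long generated answers drive the bill faster than long prompts.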

My Take

Despite being a relatively new player, Musk’s xAI is already challenging the AI heavyweights. The latest release showcases the power of its Colossus supercomputer and pushes the scaling frontier further, though in the wake of the Grok 3 controversy, it’s likely to face heightened scrutiny from experts around the world.

2️⃣ Perplexity’s Comet browser for AI-first web

Perplexity launched Comet, a new AI browser that embeds the company’s search engine alongside an assistant capable of performing agentic tasks—like booking meetings and navigating websites—while integrating with user workflows.

Key Takeaways

The Comet Assistant lives in a sidebar that watches users browse, answering questions while automating tasks like email and calendar management.

Users can use the agentic assistant to “vibe browse” without interacting directly with sites, using typed natural language or voice commands.

The browser promises seamless integration with existing extensions and bookmarks, supporting both Mac and Windows at launch.

Perplexity Max subscribers ($200/month) get first access, with invites going out to the waitlist on a rolling basis; Pro, free, and Enterprise users will follow at a later date.

My Take

Chrome has had a chokehold on the browser market for years, but it appears to be a step behind on the agentic, AI-driven transition. While there will be hiccups as agents continue to evolve, Dia, Comet, and soon OpenAI are taking the first steps in a new, inevitable shift in how we navigate and take actions on the web.

3️⃣ Teachers' union launches $23M AI academy

The American Federation of Teachers partnered with Microsoft, OpenAI, and Anthropic to create a national AI training hub that will prepare 400,000 educators to integrate the technology into classrooms across the U.S.

Key Takeaways

The academy will offer workshops, online courses, and professional development, with a flagship campus in NYC and plans to scale nationally.

OpenAI is committing $10M in funding and technical support, with Microsoft and Anthropic also contributing to cover training, resources, and AI tool access.

Teachers will get priority support, API credits, and early access to education-focused AI features, with an emphasis on accessibility for high-needs districts.

My Take

With AI rapidly reshaping both the workforce and the classroom, this partnership better equips teachers, who are on the front lines of a tech revolution for the younger generation. The academy’s success could influence how AI is taught, governed, and trusted in education for years to come.

How to Succeed in Your Agentic AI Transformation

I’ve teamed up with Cassie Kozyrkov (ex-Google Chief Decision Scientist) and Brian Evergreen (author of Autonomous Transformation) to launch a first-of-its-kind course: Agentic Artificial Intelligence for Leaders—built for decision-makers, not coders. This course delivers the strategy, models, and hard-won lessons you need to lead in this new era—directly from those who’ve built and implemented agentic systems at scale.

What you'll learn

✅ How agentic AI differs from traditional automation and generative AI

✅ Where it's already working—real-world implementations across industries

✅ Strategic frameworks to start and scale agentic AI today

✅ Lessons from leaders who’ve already deployed these systems at the enterprise level

My Take

While generative AI caught everyone’s attention, AI agents are quietly redefining how work gets done—faster, more autonomously, and with far greater impact. Leaders who understand this shift will unlock new value. Those who don’t may get left behind. Join us for the First Executive Masterclass on Agentic AI Strategy and Implementation ⭐⭐⭐⭐⭐

4️⃣ AI takes the wheel for managerial decisions

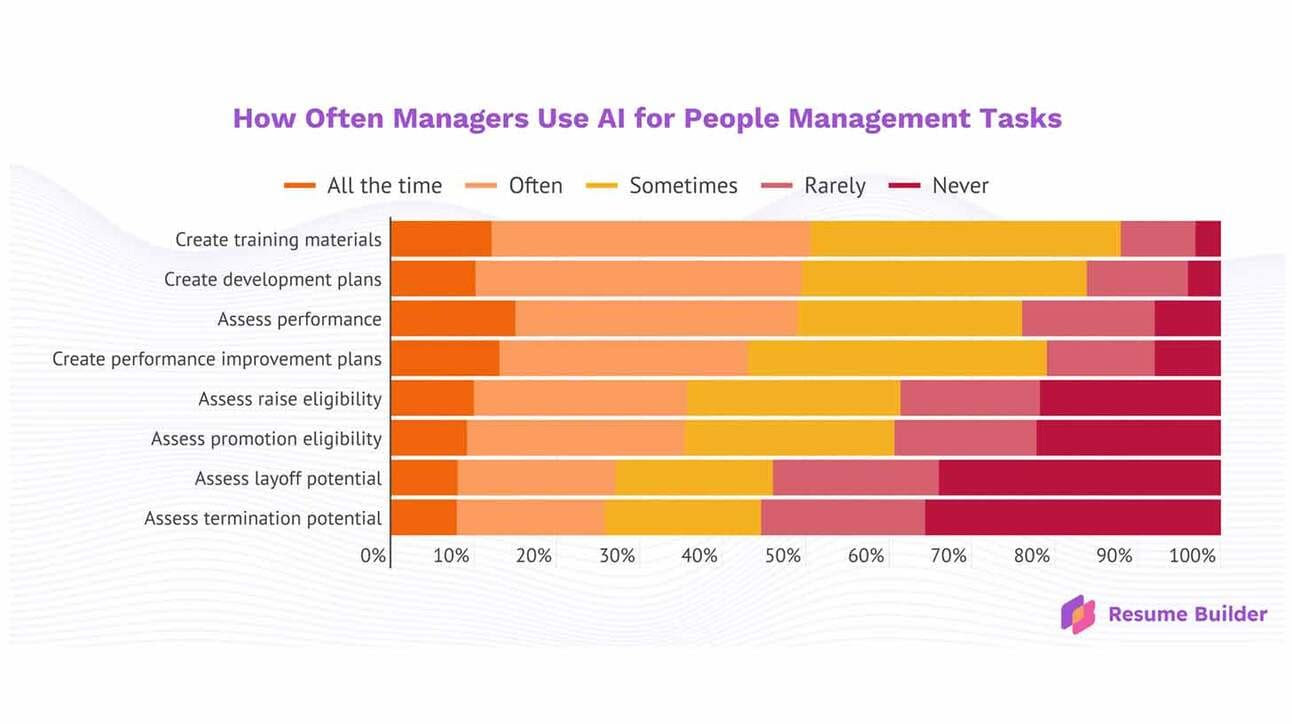

A new survey from Resume Builder found that 60% of managers are using AI tools to make critical business and personnel decisions, allowing the tech to determine raises, promotions, and firings with minimal oversight or training.

Key Takeaways

Resume Builder surveyed 1,342 managers and found that, among those using AI, 78% use it to determine raises, 77% for promotions, and 64% for terminations.

ChatGPT dominated as the primary tool for 53% of AI-using managers, followed by Microsoft Copilot at 29% and Google Gemini at 16%.

One in five managers also frequently allow AI to make final decisions without human review, despite most never receiving formal AI training or guidelines.

Nearly half of the managers were asked to evaluate whether AI could replace their team members, with 43% following through on replacements.

My Take

AI is already entrenched in management, but just as entry-level jobs have been the first to be automated, lower-level employees are again the ones affected when supervisors offload decisions to ChatGPT. As models scale in intelligence, will owners automate managers out of the equation next?

5️⃣ Study: Why do some AI models fake alignment?

Researchers from Anthropic and Scale AI just published a study testing 25 AI models for “alignment faking,” finding only five demonstrated deceptive behaviors, but not for the reasons we might expect.

Key Takeaways

Only five models showed alignment faking out of the 25: Claude 3 Opus, Claude 3.5 Sonnet, Llama 3 405B, Grok 3, and Gemini 2.0 Flash.

Claude 3 Opus was the standout, consistently tricking evaluators to safeguard its ethics, particularly at higher threat levels.

Models like GPT-4o also began showing deceptive behaviors when fine-tuned to engage with threatening scenarios or consider strategic benefits.

Base models with no safety training also displayed alignment faking, showing that most models behave because of their training, not because they are unable to deceive.

My Take

These results show that today's safety fixes might only hide deceptive traits rather than erase them, risking unwanted surprises later on. As models become more sophisticated, relying on refusal training alone could leave us vulnerable to genius-level AI that also knows when and how to strategically hide its true objectives.

Which of these news stories resonates most with you?

Let us join hands to make our world more human! — Pascal

#artificialintelligence #intelligentautomation #futureofwork #AI #automation #management #technology #innovation