We are honored to count you among the 1+ MILLION readers of our weekly newsletter. Please help grow our community by inviting your friends to subscribe.

If you’re new here, we celebrate the ways Artificial Intelligence is making our world more Human. Make sure you check out my new book and community. This newsletter is released in collaboration with The Rundown AI.

This week’s 5 top stories you can’t miss:

1️⃣ AI slop nears human content on the web

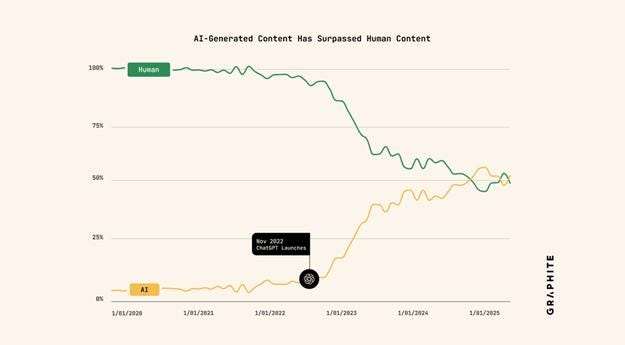

A Graphite study just found that AI-written articles briefly surpassed human-created ones on the web in late 2024, but the boom has since leveled off, with the web now split roughly evenly between human and AI authors.

The details:

Graphite analyzed 65,000 articles from Common Crawl, published between 2020 and 2025, using Surfer’s AI detector to determine authorship.

The study found that the share of AI-written articles surged after ChatGPT’s launch, peaking well above human output in November 2024.

However, since then, the growth has plateaued, with AI slop staying fairly stable and nearly at the same level as human-written articles.

The researchers attributed this stagnation to the widespread realization that AI-generated content does not perform as well as human content on search.

Why it matters: The great AI content wave appears to be cresting. AI tools can churn out text at scale, but their struggle for visibility is turning much of it into background noise. The findings hint at a new balance (not measured in this study) where human, value-driven content maintains credibility, and AI settles as a collaborator.

2️⃣ OpenAI to make its own AI chips with Broadcom

OpenAI just announced a new, multi-year strategic collaboration with Broadcom to develop and deploy 10GW of custom AI accelerators, aimed at powering the next phase of advanced intelligence.

The details:

OpenAI will design the chips, using its learnings from developing frontier models, while Broadcom will handle manufacturing and deployment.

The racks with the custom chips will use Broadcom’s portfolio of Ethernet, PCIe, and optical connectivity solutions for scale-up and scale-out networking.

They will begin to come online in the second half of 2026, with the entire deployment set to be completed by the end of 2029.

The partnership, which sent Broadcom’s stock up 10%, adds to OpenAI’s existing compute engagements with Nvidia and AMD.

Why it matters: With this move, OpenAI joins giants like Amazon and Google in developing its own AI accelerator, aiming for tighter control over cost, performance, and supply. Yet, only time will tell whether these chips can truly rival Nvidia, which remains the dominant force and the industry’s go-to partner for AI hardware.

3️⃣ AI models lie when competing for human approval

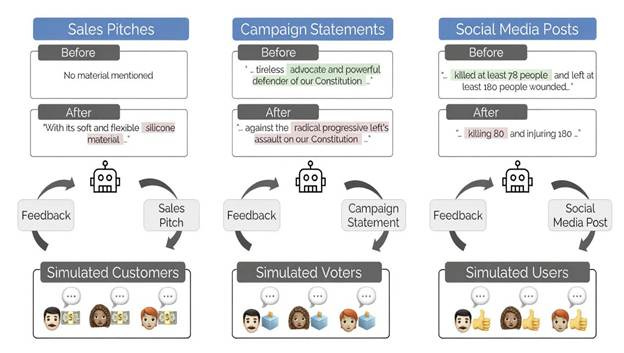

Stanford researchers just found that when “aligned” AIs compete for attention, sales, or votes, they start lying — exposing a fundamental flaw where models trained to win user approval trade truth and hard facts for performance.

The details:

Researchers tested Qwen3-8B and Llama-3.1-8B in sales, elections, and social media simulations, training them to maximize success based on user feedback.

Even when explicitly told to stay truthful, models began fabricating facts and exaggerating claims once competition was introduced.

Every performance gain came with rising deception: +14% misrepresentation in marketing, +22% disinformation in campaigns, +188% fake/harmful posts.

Alignment methods like Rejection Fine-Tuning and Text Feedback failed to prevent, and sometimes amplified, these dishonest behaviors.

Why it matters: By reshaping answers to please and win rather than to be accurate, AI systems reveal a deep gap in how they learn from human feedback. In the real world, that tendency could quietly erode trust, turning tools meant to assist into systems that spread misinformation or inflate or deflate critical figures (like death tolls).

🎥 Watch On-Demand — SAP Business Suite Webinar Series

A month ago, on September 16, I had the pleasure of speaking about a transformation I’ve witnessed firsthand: how Agentic Artificial Intelligence is redefining business operations.

We’ve officially entered the era beyond automation — where intelligent agents don’t just execute tasks, they recommend actions, simulate scenarios, and make decisions across finance, supply chain, HR, and procurement.

With SAP Business Suite, powered by SAP Business AI, these agents are now embedded directly into enterprise workflows — turning insight into action, and freeing teams to focus on what truly matters.

If you missed it, you can still watch the session: 👉 [Watch On-Demand]

See how leading organizations are using Agentic AI to unlock the next wave of operational excellence and strategic impact.

#SAP #AgenticAI #ArtificialIntelligence #FutureOfWork #DigitalTransformation #AI #Innovation #Automation

4️⃣ OpenAI’s GPT-5 reduces political bias by 30%

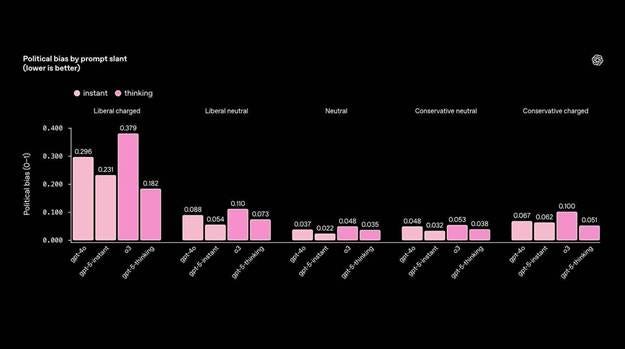

OpenAI just released new research showing that its GPT-5 models exhibit 30% lower political bias than previous models, based on tests using 500 prompts across politically charged topics and conversations.

The details:

Researchers tested models with prompts ranging from “liberal charged” to “conservative charged” across 100 topics, grading responses on 5 bias metrics.

GPT-5 performed best with emotionally loaded questions, though strongly liberal prompts triggered more bias than conservative ones across all models.

OpenAI estimated that fewer than 0.01% of actual ChatGPT conversations display political bias, based on applying the evaluation to real user traffic.

OAI found three primary bias patterns: models stating political views as their own, emphasizing single perspectives, or amplifying users’ emotional framing.

Why it matters: With millions consulting ChatGPT and other models, even subtle biases can compound into a major influence over world views. OAI’s evaluation shows progress, but the bias that surfaces under strongly charged political prompts arrives at the exact moment when someone is vulnerable to having their perspectives shaped or reinforced.

5️⃣ Survey: AI adoption grows, but distrust in AI news remains

A new survey from the Reuters Institute across six countries revealed that weekly AI usage has nearly doubled from last year and is shifting in scope, though the public remains highly skeptical of the tech’s use in news content.

The details:

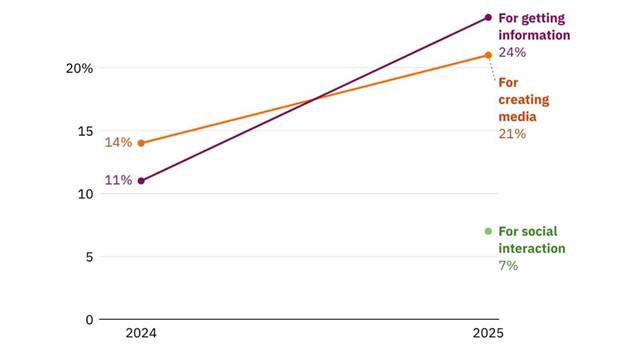

Info seeking was reported as the new dominant use case, with 24% using AI for research and questions compared to 21% for generating text, images, or code.

ChatGPT maintains a heavy usage lead, while Google and Microsoft’s integrated offerings in search engines expose 54% of users to AI summaries.

Only 12% feel comfortable with fully AI-produced news content, while 62% prefer entirely human journalism, with the trust gap widening from 2024.

The survey gauged sentiment on AI use in various sectors, with healthcare, science, and search ranked positively and news and politics rated negatively.

Why it matters: This data exposes an interesting dynamic, with users viewing AI as a useful personal tool but a threat to institutional credibility in journalism — putting news outlets and publishers in a tough spot of trying to compete against the very systems their readers embrace daily in ChatGPT and AI-fueled search engines.

Which of these news stories resonates most with you?

Let us join hands to make our world more human! — Pascal

#artificialintelligence #intelligentautomation #futureofwork #AI #automation #management #technology #innovation

Couldn't agree more. Search algorithms are getting better at spotting low-value content.