Intelligent Automation Newsletter #193

We are honored to count you among the 1+ MILLION readers of our weekly newsletter. Please help grow our community by inviting your friends to subscribe.

If you’re new here, we celebrate the ways Artificial Intelligence is making our world more Human. Make sure you check out my new book and community.

This week’s 5 top stories you can't miss:

1️⃣ Tech giants push for mandatory AI education

Over 250 tech leaders and CEOs from major companies just signed an open letter urging U.S. states to offer AI and computer science courses and make the subjects mandatory graduation requirements in high school.

The details:

The letter emphasizes keeping the U.S. competitive with nations like China that already mandate AI education, and preparing students as AI "creators."

It also highlights research showing that a single high school CS course can increase early wages by 8% across all career paths, regardless of college attendance.

Key signatories include CEOs from Microsoft, LinkedIn, Adobe, AMD, Indeed, Khan Academy, Airbnb, Dropbox, Zoom, Uber, and more.

The push coincides with President Donald Trump's recent executive order establishing a White House task force to expand K-12 AI instruction.

My take:

Just as computer and internet learning became common throughout classrooms, AI is quickly becoming a vital skill, and one that will be applicable across every aspect of life. The next generation of students will need to be AI-native, and this move looks to make sure AI is part of the educational curriculum.

2️⃣ OpenAI retains nonprofit control in surprise move

OpenAI just announced it will maintain nonprofit control as it transitions its business arm into a public benefit corporation (PBC), marking a major reversal of its polarizing previous plans to become a fully for-profit entity.

The details:

The existing for-profit LLC will now transition into a PBC, a structure used by other mission-driven companies like Anthropic and Patagonia.

Unlike previous considerations, the founding nonprofit organization will become a major shareholder and retain governance control over the new PBC.

The move comes amid pressure from civic groups and former employees, as well as a lengthy legal battle with Elon Musk over the original nonprofit mission.

Sam Altman detailed the decision to employees, saying the move will allow OpenAI to secure “trillions” to deliver beneficial AGI to the world.

My take:

After months of lawsuits and protests, OpenAI is backtracking on its for-profit ambitions. The PBC looks to thread a needle between the startup’s original nonprofit mission and the massive capital needed to create AGI — though it remains to be seen what this means for capital that was reportedly contingent on a for-profit turn.

🧊 [SPONSORED] JOIN ME and top business leaders at SAP's flagship event to explore their bold vision, breakthrough innovations, and real-world solutions transforming industries.

Register here for the Sapphire Virtual session or to attend in person in Orlando or Madrid.

Hear from industry leaders using SAP to tackle today’s biggest challenges

Get a first look at the latest updates to SAP Business Suite and Joule Agents

Dive into real product demos, advanced analytics, and intelligent apps

3️⃣ FutureHouse’s ‘superintelligent’ science agents

Eric Schmidt-backed FutureHouse launched a new suite of specialized AI research agents designed for scientific discovery, aiming to tackle the information bottleneck researchers face when navigating millions of papers and databases.

The details:

The platform offers four specialized agents (Crow, Falcon, Owl, and Phoenix), all immediately accessible via web or API.

Crow handles general research, Falcon conducts deep literature reviews, Owl identifies previous research, and Phoenix specializes in chemistry workflows.

FutureHouse said the agents reach superhuman levels in literature search and synthesis, beating out both PhD researchers and top traditional search models.

The agents can access specialized scientific databases and have transparent reasoning, allowing researchers to track how they arrive at a conclusion.

My take:

Plenty of labs are pursuing similar goals, but unlike FutureHouse, only a few have a product already available. The AI science wave is coming, and the ability to synthesize vast amounts of data and reason through libraries of research will soon be embedded into every scientific workflow.

4️⃣ Apple, Anthropic partner on coding platform

Apple is reportedly partnering with AI startup Anthropic to develop a new AI-powered ‘vibe-coding’ platform that will automate writing, editing, and testing code within Apple’s Xcode software, according to a new report from Bloomberg.

The details:

Apple’s revamped Xcode will incorporate Anthropic's Claude Sonnet model, with plans to initially test the system internally before a public release.

The "vibe-coding" tool will feature a conversational interface, allowing programmers to easily request, modify, and troubleshoot code.

Apple is expected to further diversify its external AI integrations by adding Google's Gemini later this year, alongside an existing partnership with OpenAI.

My take:

Despite Apple typically favoring in-house development, the Apple Intelligence debacle may have the tech giant branching out to industry leaders to play catch-up. Apple’s massive user base likely doesn’t care whether the company has its own state-of-the-art model; they just need a functioning product across the ecosystem.

5️⃣ Study questions leading AI benchmark

A new study from researchers at Cohere Labs, MIT, Stanford, and other institutions claims that LMArena, the leading crowdsourced AI benchmark, gives unfair advantages to major tech companies, potentially distorting its widely followed rankings.

The details:

The study claims providers like Meta, Google, and OpenAI privately test multiple model variants on the Arena and then publish only the best performers.

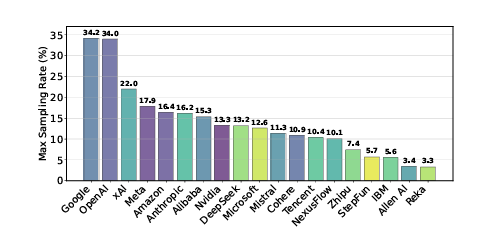

It also found that models from top labs were favored over small/open models in sampling, with Google and OpenAI receiving over 60% of all interactions.

Experiments showed that access to Arena data boosts performance on Arena-specific tasks, suggesting model overfitting rather than actual capability gains.

The researchers also noted that 205 models have been silently removed from the platform, with open-source models deprecated at a higher rate.

My take:

LMArena has disputed the study, claiming the leaderboard reflects genuine user preferences. However, the study's claims could damage the platform's credibility, which shapes how models are perceived. Combined with the Llama 4 Maverick benchmark debacle, this study highlights that AI evaluation isn't always what it seems.

The two posts you can't miss this week:

👉 Every single team member is a performance contributor.

👉 Not your average train ride.

Let us join hands to make our world more human! — Pascal
